Collaboratively Test & Evaluate your DSPy App

Enhancing DSPy development using Autoblocks

DSPy is a framework for algorithmically optimizing LM prompts and weights.

Pairing it with Autoblocks adds experiment tracking, tracing of the underlying LLM calls, and collaboratively managed configuration.

This guide shows how to set up a DSPy application and integrate Autoblocks tooling to tune it further.

At the end of this guide, you’ll have:

Set up a functioning DSPy application: configured and run a DSPy program that compiles and evaluates a Chain of Thought module on GSM8K math questions.

Configured an Autoblocks Test Suite: tracked experiments and evaluated your application using the Autoblocks CLI and SDK.

Enabled Autoblocks Tracing: gained insight into the LLM calls DSPy makes under the hood.

Created an Autoblocks Config: managed your application’s settings remotely and collaboratively, so they can be updated as requirements change.

Note: You can view the full working example here: https://github.com/autoblocksai/autoblocks-examples/tree/main/Python/dspy

Note: We will use Poetry throughout this guide, but you can use the dependency management solution of your choice.

Step 1: Set Up the DSPy App

We will use the DSPy minimal example as a base.

First, create a new Poetry project:

Install DSPy:

Create a file named run.py inside the autoblocks_dspy directory. This file sets up the language model, loads the GSM8K dataset, defines a Chain of Thought (CoT) program, and evaluates the program using DSPy.
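DSPy performs the compilation and evaluation itself. To make "evaluates the program" concrete, here is a framework-free sketch of what an evaluation pass does conceptually: run the program on each dev example, score it with a metric that compares the final integer in each answer (the GSM8K convention), and report accuracy. `Example`, `parse_integer_answer`, `gsm8k_style_metric`, and `evaluate` are hypothetical stand-ins written for illustration; they are not DSPy's API, and the stub program replaces the LLM so the sketch runs offline.

```python
import re
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class Example:
    """A question paired with its gold answer, in the style of a GSM8K example."""
    question: str
    answer: str


def parse_integer_answer(text: str) -> Optional[int]:
    """Return the last integer in the text, or None if it contains no digits.

    Simplified: commas are stripped as thousands separators, and each run of
    digits counts as its own number, so decimals are not handled.
    """
    matches = re.findall(r"-?\d+", text.replace(",", ""))
    return int(matches[-1]) if matches else None


def gsm8k_style_metric(gold: Example, predicted_answer: str) -> bool:
    """Correct when the final integers of the gold and predicted answers match."""
    gold_value = parse_integer_answer(gold.answer)
    return gold_value is not None and gold_value == parse_integer_answer(predicted_answer)


def evaluate(program: Callable[[str], str], devset: Iterable[Example]) -> float:
    """Run the program on every example and return accuracy as a percentage."""
    examples = list(devset)
    if not examples:
        return 0.0
    correct = sum(gsm8k_style_metric(ex, program(ex.question)) for ex in examples)
    return 100.0 * correct / len(examples)


# Usage with a stub program that always answers "5" (no LLM involved).
devset = [
    Example("What is 2 + 3?", "The answer is 5"),
    Example("What is 10 - 4?", "#### 6"),
]
print(evaluate(lambda question: "5", devset))  # 50.0
```

In the real run.py, DSPy supplies the dataset, the metric, and the evaluator, and the program is the compiled Chain of Thought module rather than a lambda.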

Now update the pyproject.toml file to include a start script. This makes it easy to run your DSPy application with a single command:

Run poetry install again to register the script:

Set your OpenAI API key as an environment variable:

Now you can run the application using Poetry:

You should see the evaluation output in your terminal, which means you have successfully run your first DSPy application!
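If the run instead fails partway through with an authentication error, the key usually was not exported in the shell Poetry runs in. A fail-fast check at the top of run.py surfaces that before any LLM call is made. `require_env` is a hypothetical helper; the only assumption about DSPy is that its OpenAI client reads `OPENAI_API_KEY` from the environment, as the OpenAI Python client does by default.

```python
import os
from typing import Dict


def require_env(*names: str) -> Dict[str, str]:
    """Return the requested environment variables, raising if any are missing or empty."""
    missing = [name for name in names if not os.environ.get(name)]
    if missing:
        raise RuntimeError(f"Missing required environment variable(s): {', '.join(missing)}")
    return {name: os.environ[name] for name in names}
```

Call `require_env("OPENAI_API_KEY")` before configuring the language model. The same helper can check the Autoblocks keys added in later steps.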

Step 2: Add Autoblocks Testing

Now let's add Autoblocks Testing to our DSPy application so we can declaratively define tests to track experiments and optimizations.

First, install the Autoblocks SDK:

Update the run function in autoblocks_dspy/run.py to take a question as input and return the result. This prepares your function for testing:

Now we’ll add our Autoblocks test suite and test cases. Create a new file inside the autoblocks_dspy folder named evaluate.py. This script defines the test cases, evaluators, and test suite.
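The Autoblocks SDK provides its own base classes and runner for this (the linked example repository shows the exact imports). To show the moving parts without depending on the SDK, here is a plain-Python sketch of the same structure: each test case has a stable hash so results line up across runs, each evaluator returns a score compared against a threshold, and a small runner ties them together. `TestCase`, `Evaluation`, `Correctness`, and `run_suite` are hypothetical names, not the Autoblocks API.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Dict, Sequence


@dataclass(frozen=True)
class TestCase:
    """One input to the app plus the answer we expect back."""
    question: str
    expected_answer: str

    def hash(self) -> str:
        # Stable ID derived from the input only, so a case lines up across runs
        # even if its expected answer is later corrected.
        return hashlib.md5(self.question.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class Evaluation:
    score: float
    threshold: float

    @property
    def passed(self) -> bool:
        return self.score >= self.threshold


class Correctness:
    """Scores 1.0 when the stripped output exactly equals the expected answer."""

    id = "correctness"
    threshold = 1.0

    def evaluate(self, test_case: TestCase, output: str) -> Evaluation:
        score = 1.0 if output.strip() == test_case.expected_answer.strip() else 0.0
        return Evaluation(score=score, threshold=self.threshold)


def run_suite(
    test_cases: Sequence[TestCase],
    fn: Callable[[str], str],
    evaluators: Sequence[Correctness],
) -> Dict[str, Dict[str, Evaluation]]:
    """Call fn on each case and return {case hash: {evaluator id: Evaluation}}."""
    results: Dict[str, Dict[str, Evaluation]] = {}
    for case in test_cases:
        output = fn(case.question)
        results[case.hash()] = {ev.id: ev.evaluate(case, output) for ev in evaluators}
    return results
```

In the real evaluate.py, `fn` is the `run` function from run.py, and the SDK's runner replaces this hand-rolled loop so that results are reported to the Autoblocks Testing UI.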

Update the run script in pyproject.toml to point to your new test script:

Install the Autoblocks CLI following the guide here: https://docs.autoblocks.ai/cli/setup

Retrieve your local testing API key from the settings page (https://app.autoblocks.ai/settings/api-keys) and set it as an environment variable:

Run the test suite:

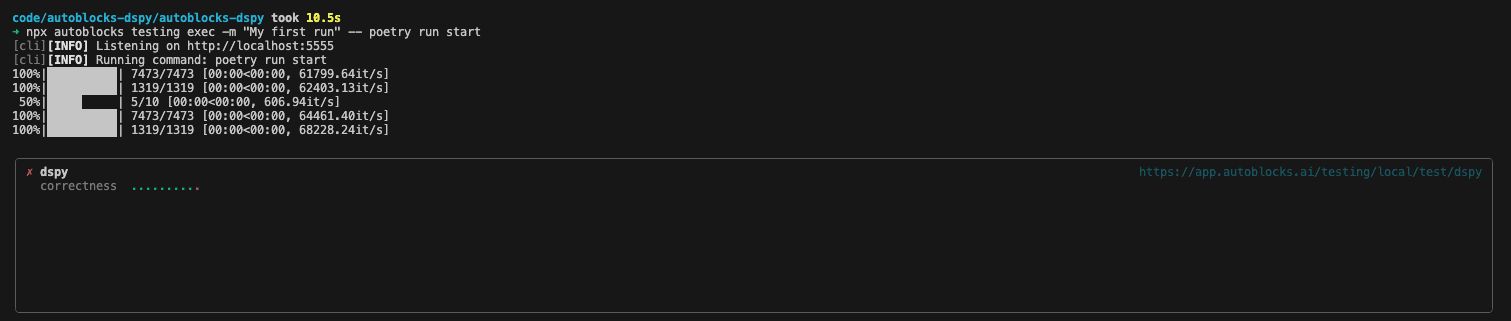

In your terminal you should see:

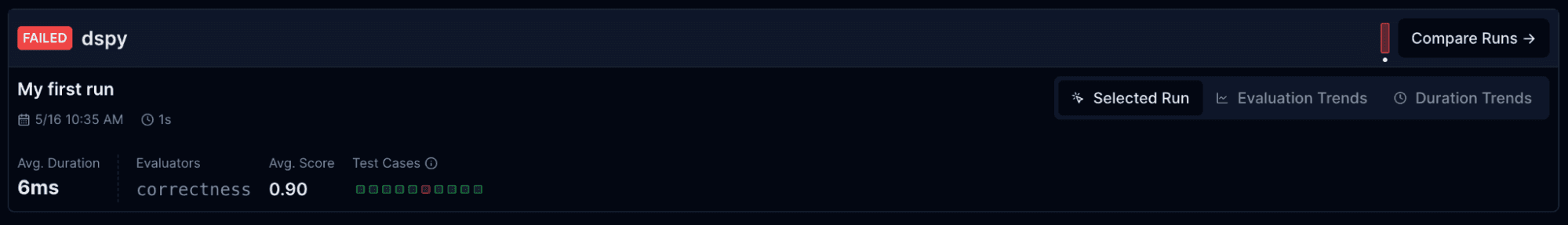

In the Autoblocks Testing UI (https://app.autoblocks.ai/testing/local), you should see your test suite as well as the results:

🎉 Congratulations on running your first Autoblocks test on your DSPy application!

Step 3: Log OpenAI Calls

To gain insights into the LLM calls DSPy is making under the hood, we can add the Autoblocks Tracer.

Update autoblocks_dspy/run.py to override DSPy’s OpenAI client. The override logs requests and responses to Autoblocks, helping you trace the flow of data through your AI application:

Retrieve your ingestion key from the settings page and set it as an environment variable:
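The exact override depends on the DSPy and Autoblocks SDK versions in use (see the linked example). The underlying pattern is a wrapper that emits a request event before each LLM call and a response event after it, sharing an ID and recording latency. In this sketch, `traced`, `send_event`, the in-memory `EVENTS` list, and the event names `ai.request`, `ai.response`, and `ai.error` are illustrative assumptions; replace `send_event` with your tracer’s real method. A real integration would also typically group all calls made for one test case under that test case’s trace rather than minting a fresh ID per call.

```python
import functools
import time
import uuid
from typing import Any, Callable, Dict, List

# In-memory list standing in for a real tracer, so the sketch runs offline.
EVENTS: List[Dict[str, Any]] = []


def send_event(name: str, trace_id: str, properties: Dict[str, Any]) -> None:
    """Hypothetical sink; a real integration would forward the event to Autoblocks."""
    EVENTS.append({"name": name, "trace_id": trace_id, "properties": properties})


def traced(call_llm: Callable[..., Any]) -> Callable[..., Any]:
    """Wrap a keyword-argument LLM call so every call logs request and response events."""

    @functools.wraps(call_llm)
    def wrapper(**request: Any) -> Any:
        trace_id = str(uuid.uuid4())  # Ties the request, response, and any error together.
        send_event("ai.request", trace_id, dict(request))
        start = time.perf_counter()
        try:
            response = call_llm(**request)
        except Exception as exc:
            send_event("ai.error", trace_id, {"error": repr(exc)})
            raise
        latency_ms = (time.perf_counter() - start) * 1000
        send_event("ai.response", trace_id, {"response": response, "latency_ms": latency_ms})
        return response

    return wrapper


@traced
def fake_completion(prompt: str, model: str) -> str:
    """Stand-in for the real OpenAI call that DSPy makes."""
    return f"echo: {prompt}"


fake_completion(prompt="What is 2 + 3?", model="gpt-3.5-turbo")
print([event["name"] for event in EVENTS])  # ['ai.request', 'ai.response']
```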

Run your tests again:

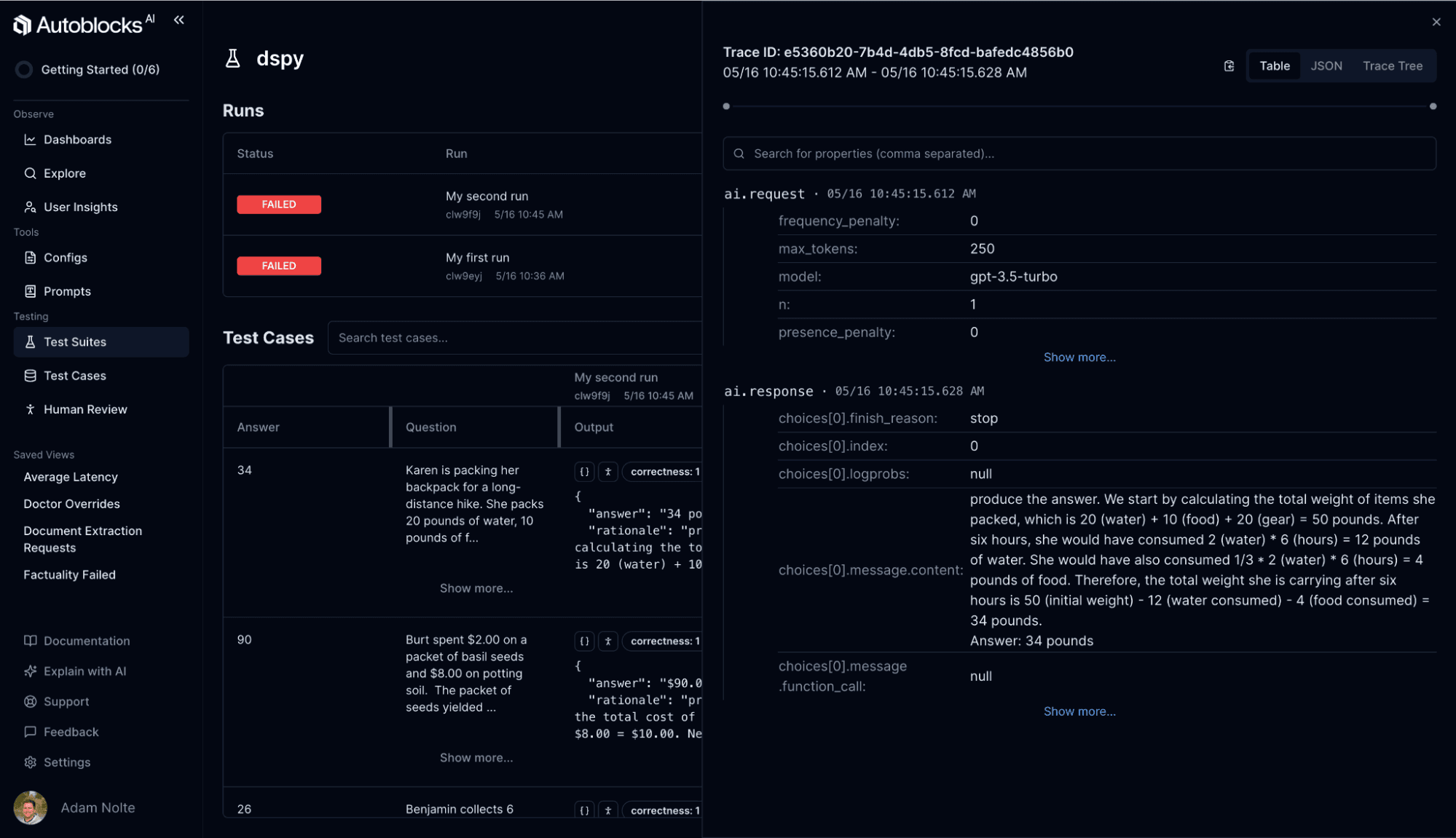

Inside the Autoblocks UI, you can now open events for a test case to view the underlying LLM calls that DSPy made.

Step 4: Add Autoblocks Config

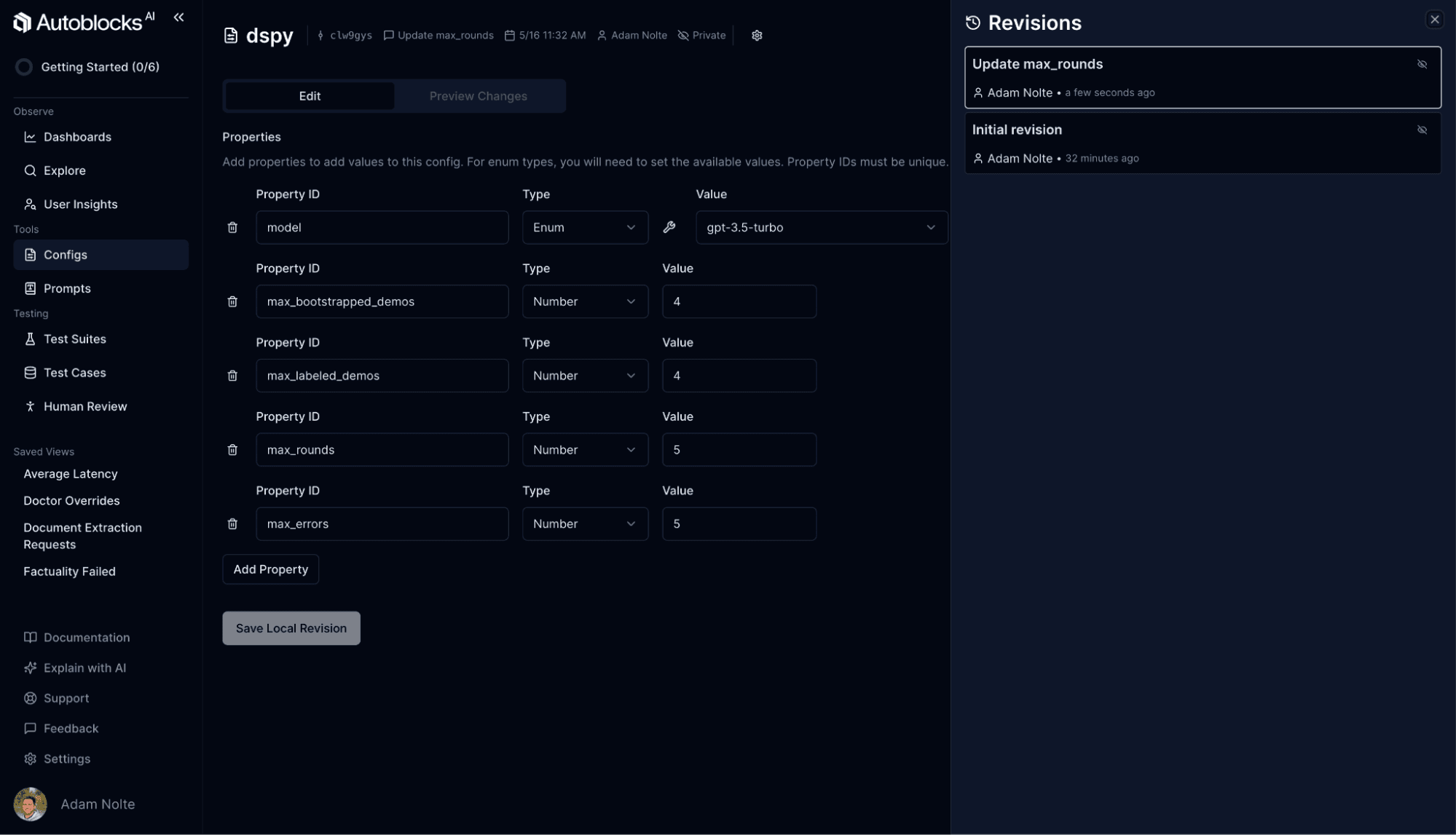

Next, you can add an Autoblocks Config to enable collaborative editing and versioning of the different configuration parameters for your application.

First, create a new config in Autoblocks (https://app.autoblocks.ai/configs/create).

Name your config dspy and set up the following parameters:

| Parameter Name | Type | Default | Values |
| --- | --- | --- | --- |
| model | enum | gpt-3.5-turbo | gpt-3.5-turbo, gpt-4, gpt-4-turbo |
| max_bootstrapped_demos | number | 4 | |
| max_labeled_demos | number | 4 | |
| max_rounds | number | 1 | |
| max_errors | number | 5 | |

Create a file named config.py inside the autoblocks_dspy directory. This script defines the configuration model in code using Pydantic and loads the remote config from Autoblocks.
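The real config.py relies on Pydantic and the Autoblocks SDK to fetch the remote values. Without either dependency, the shape of the model can be sketched with a standard-library dataclass that validates the parameters listed above and splits them into the model name and the optimizer settings. DSPy’s `BootstrapFewShot` optimizer accepts keyword arguments with these four names. `DspyConfig`, `from_remote`, and `optimizer_kwargs` are hypothetical names, and the dict passed to `from_remote` stands in for whatever the SDK actually returns.

```python
from dataclasses import asdict, dataclass
from typing import Any, Dict

ALLOWED_MODELS = ("gpt-3.5-turbo", "gpt-4", "gpt-4-turbo")
NUMERIC_FIELDS = ("max_bootstrapped_demos", "max_labeled_demos", "max_rounds", "max_errors")


@dataclass(frozen=True)
class DspyConfig:
    """Local mirror of the remote "dspy" config; defaults match the parameter list."""
    model: str = "gpt-3.5-turbo"
    max_bootstrapped_demos: int = 4
    max_labeled_demos: int = 4
    max_rounds: int = 1
    max_errors: int = 5

    def __post_init__(self) -> None:
        # Reject values the UI should never produce, so bad edits fail loudly.
        if self.model not in ALLOWED_MODELS:
            raise ValueError(f"model must be one of {ALLOWED_MODELS}, got {self.model!r}")
        for name in NUMERIC_FIELDS:
            value = getattr(self, name)
            if not isinstance(value, int) or value < 0:
                raise ValueError(f"{name} must be a non-negative integer, got {value!r}")

    @classmethod
    def from_remote(cls, values: Dict[str, Any]) -> "DspyConfig":
        """Build from a dict of remote values, ignoring keys this code does not know."""
        known = {k: v for k, v in values.items() if k in cls.__dataclass_fields__}
        return cls(**known)

    def optimizer_kwargs(self) -> Dict[str, int]:
        """Every setting except the model, ready to pass to the few-shot optimizer."""
        kwargs = asdict(self)
        kwargs.pop("model")
        return kwargs
```

In run.py, the values would then feed DSPy roughly as `BootstrapFewShot(metric=gsm8k_metric, **config.optimizer_kwargs())`, while `config.model` selects the language model. Ignoring unknown keys lets someone add a parameter in the UI before the code reads it.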

Update autoblocks_dspy/run.py to use the newly created config. This ensures your application uses the configuration parameters defined in Autoblocks:

Run your tests!

You can now edit your config in the Autoblocks UI and maintain a history of all changes you’ve made.

What’s Next?

Congratulations on integrating DSPy and Autoblocks into your workflow!

With this setup, you can track experiments, inspect the LLM calls behind each test case, and tune your application’s settings without code changes.

To further enhance your development process and foster collaboration across your entire team, consider integrating your application with a Continuous Integration (CI) system.

The video below demonstrates how any team member can tweak configurations and test your application within Autoblocks, without needing to set up a development environment. This lets teammates who don’t write code contribute directly to tuning and evaluating your AI application.

About Autoblocks

Autoblocks enables teams to continuously improve their AI-powered products as they scale, with speed and confidence.

Product teams at companies of all sizes, from seed-stage startups to multi-billion-dollar enterprises, use Autoblocks to guide their AI development efforts.

The Autoblocks platform revolves around outcome-oriented development. It combines product analytics with testing to help teams focus their resources on the product changes that improve the KPIs that matter.

About DSPy

DSPy is a framework for algorithmically optimizing LM prompts and weights, especially when LMs are used one or more times within a pipeline.