Introduction

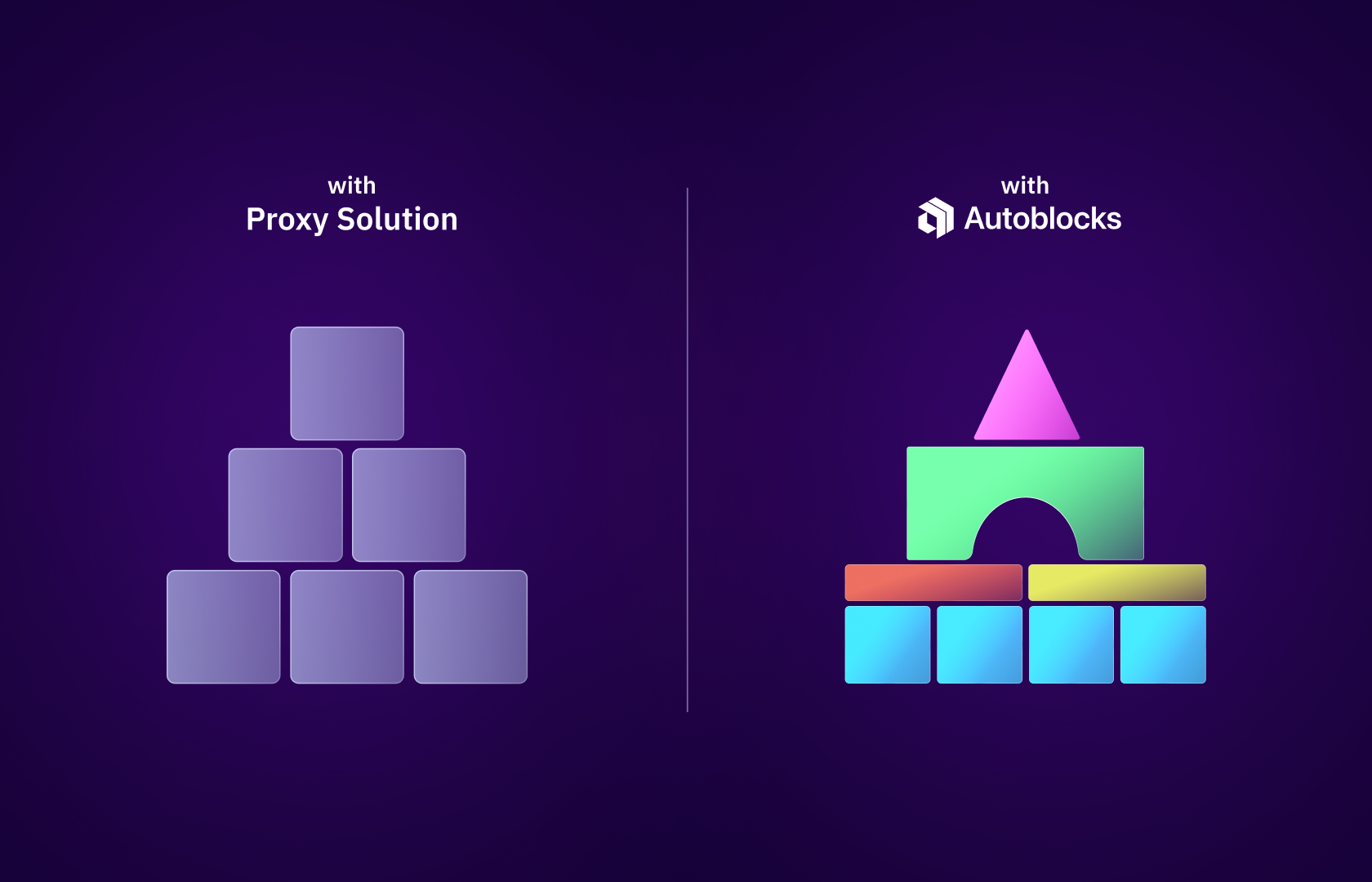

Unlike other LLMOps platforms, Autoblocks was designed to be proxyless by default, meaning we don’t sit between you and your GenAI API providers.

What does it mean to be proxyless by default? Autoblocks is decoupled from any model or framework dependencies. We give users full control over how they build their GenAI products.

This decision has one huge benefit for our users: increased adaptability. By not sitting between you and your API providers, our proxyless solution doesn’t put your product velocity and user experience in jeopardy.

Proxies: Convenient, But Not Adaptable

We get it: proxy solutions are enticing. Because they’re so prescriptive and ingrained in your product, they can offer a lot of convenience for prototyping.

However, this convenience comes at a price: adaptability. Although using a no-code or proxy solution might be tempting during the earlier stages of the AI product development lifecycle, product teams require more adaptability as their products mature.

Using an LLMOps platform as a proxy presents several risks:

- Increased implementation overhead. To implement a proxy, you must reroute your existing provider calls through it, which means rewriting code and potentially compromising code quality.

- Higher latency. By adding another service to the middle of your API calls to foundation model providers, you’re adding latency (and hurting user experience).

- Hindered product velocity. When using a proxy, you’re beholden to the development speed of the proxy. If the proxy lags behind the development of any other part of your GenAI stack, including cloud API providers, you’re blocked until it catches up.

- Downtime risk. If your LLMOps platform gets interrupted, your product experience is directly affected.

- Privacy risk. Proxy solutions touch all of your user inputs and outputs, indiscriminately. This could present privacy risks if certain interactions should be redacted.
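
The downtime and privacy points above can be made concrete with a minimal sketch of the proxyless pattern. Every name here (`call_model`, `send_event`, `redact`, `run_with_telemetry`) is hypothetical and stands in for your own provider client and telemetry sink; none of it is the Autoblocks SDK. The request goes straight to the provider, and telemetry is sent afterwards on a best-effort basis, so a telemetry outage cannot break the user-facing response, and you decide what is redacted before anything leaves your code.

```python
import re
from typing import Callable

# Hypothetical redaction rule: mask anything that looks like an email address.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text: str) -> str:
    """Replace email-like substrings before the text is sent to telemetry."""
    return EMAIL_PATTERN.sub("[REDACTED]", text)


def run_with_telemetry(
    call_model: Callable[[str], str],
    send_event: Callable[[dict], None],
    prompt: str,
) -> str:
    # The request goes directly to the provider; nothing sits in between.
    output = call_model(prompt)

    # Telemetry is out-of-band and best-effort: if the sink is down,
    # the error is swallowed and the user still gets the model output.
    try:
        send_event({"input": redact(prompt), "output": redact(output)})
    except Exception:
        pass

    return output
```

In a real service the send would likely happen on a background thread or queue so that it adds no latency to the response; it is kept synchronous here only to keep the sketch short.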

Build It Your Way

After talking to hundreds of AI teams, we came away with three important insights:

- Every company builds AI products differently. Companies use different models, frameworks, providers, etc. The ways companies integrate GenAI APIs into their codebases vary widely.

- Complexity increases as products mature. As the role of GenAI in a codebase grows, its interactions with the rest of the code become increasingly difficult to manage.

- The GenAI space is moving fast. New tooling and services appear, and existing ones change, at a rapid pace.

Each of these insights emphasizes the importance of an adaptable LLMOps solution. Your LLMOps solution should mold to your needs, not the other way around.

That's exactly why we designed Autoblocks to be adaptable from day one. As a proxyless solution, Autoblocks is independent of other parts of your codebase.
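
As a rough illustration of what that independence can look like in code, the sketch below wraps any function with a tracing decorator that knows nothing about which model, framework, or provider is behind it. The names `traced`, `ask_provider_a`, and `ask_provider_b` are hypothetical and the two provider functions are stand-ins; this is not the Autoblocks SDK, only one possible shape for a provider-agnostic integration.

```python
import functools
import time
from typing import Any, Callable


def traced(sink: Callable[[dict], None]) -> Callable:
    """Wrap any function and report its name, latency, and result to `sink`."""

    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            result = fn(*args, **kwargs)  # the wrapped call is untouched
            event = {
                "name": fn.__name__,
                "latency_s": time.perf_counter() - start,
                "result": result,
            }
            try:
                sink(event)  # reporting must never break the product
            except Exception:
                pass
            return result

        return wrapper

    return decorator


events: list[dict] = []


@traced(events.append)
def ask_provider_a(prompt: str) -> str:
    return f"A: {prompt}"  # stand-in for a real call to one provider


@traced(events.append)
def ask_provider_b(prompt: str) -> str:
    return f"B: {prompt}"  # stand-in for a real call to another provider
```

Swapping one provider for another changes only the body of the wrapped function; the tracing layer stays the same.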

And we achieve this without sacrificing convenience: our turnkey SDK makes Autoblocks easy to implement, and our intuitive UI helps you quickly unlock value from the platform.

Proxyless by Default vs. Proxyless by Opt-in

Some platforms claim to offer proxyless options, but this can be misleading.

Platforms are typically either proxy by default or proxyless by default.

If a platform is proxy by default, users who opt for the proxyless option will face limitations. Such platforms will always encourage you to use their proxy to get the platform's full capabilities.

That’s not the case with Autoblocks. We are built to be proxyless, giving users complete control over their products and letting them build however they prefer.