Introduction

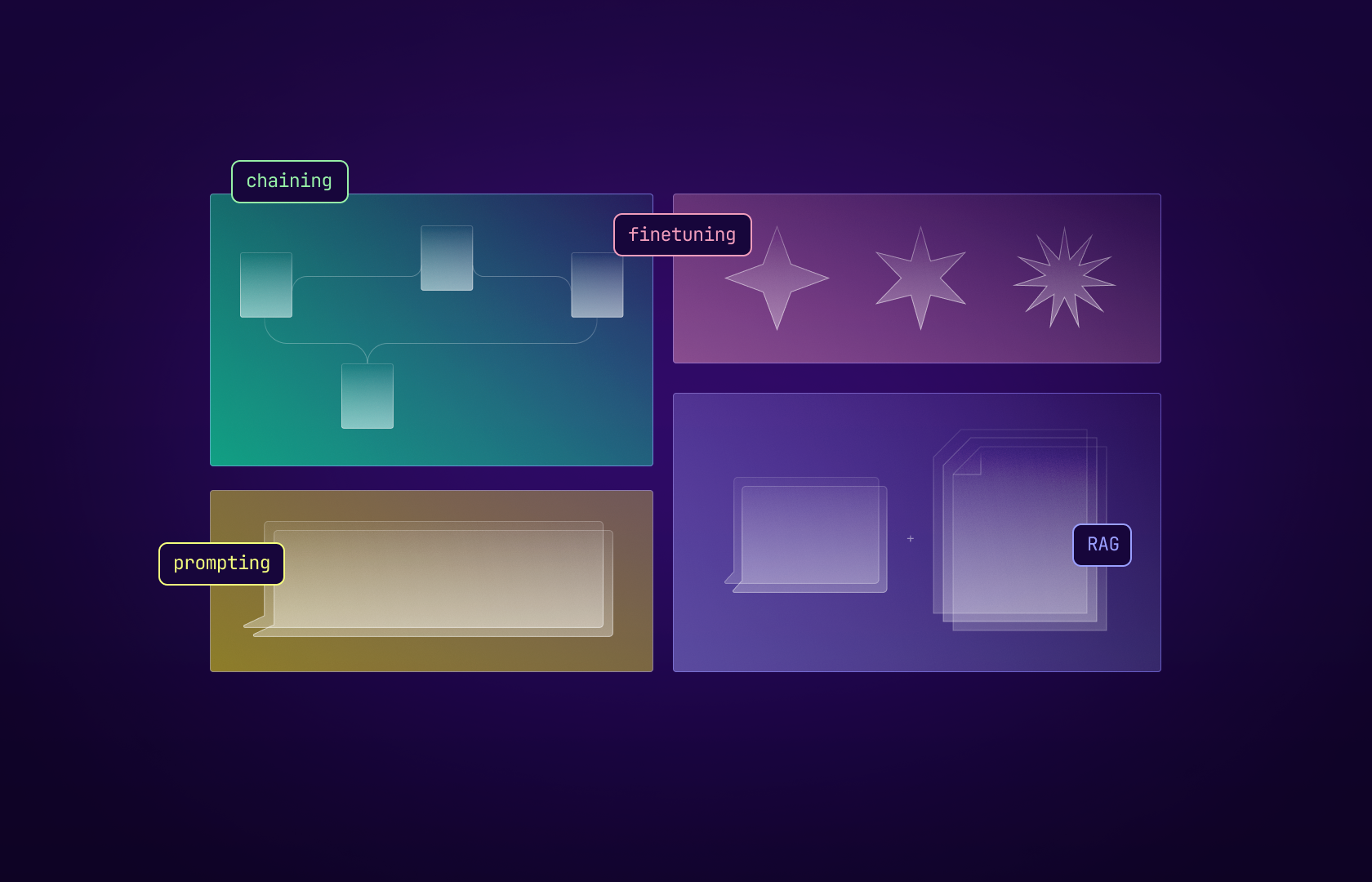

Large language models (LLMs) like GPT-3 have opened up exciting new possibilities for building AI-powered products and services. But with several techniques available, including prompting, chaining, fine-tuning, and retrieval-augmented generation (RAG), it can be unclear when to use each. This post explains when to choose prompting, fine-tuning, RAG, or chaining based on your goals, data, and resources.

Different Approaches

Prompting

Prompting involves giving an off-the-shelf LLM like GPT-3 instructions, often with a few worked examples (few-shot prompting), to induce a desired behavior without updating the model's parameters. Prompting is great when:

- You want quick iteration without training a model.

- You have limited data and resources.

- Your use case is simple enough to be handled through examples alone.

Prompting lets you build prototypes and MVPs rapidly. However, it may not work well for complex use cases or those requiring external knowledge. It also relies on keeping the examples consistent across queries.
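As a minimal sketch of few-shot prompting, the snippet below assembles an instruction, a fixed set of examples, and a new query into a single prompt string. The function name `build_few_shot_prompt`, the `Input:`/`Output:` layout, and the sentiment examples are illustrative assumptions, not a required format; the resulting string would be sent to whichever LLM API you use.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str, examples: List[Tuple[str, str]], query: str
) -> str:
    """Assemble one prompt: instruction, worked examples, then the new query."""
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Input: {text}")
        parts.append(f"Output: {label}")
        parts.append("")  # blank line between examples
    parts.append(f"Input: {query}")
    parts.append("Output:")  # left open for the model to complete
    return "\n".join(parts)


# Hypothetical examples; reusing the same list for every query keeps
# the model's behavior as consistent as prompting allows.
EXAMPLES = [
    ("The checkout flow was fast and painless.", "positive"),
    ("The app crashes every time I open it.", "negative"),
]

prompt = build_few_shot_prompt(
    "Classify the sentiment of each input as positive or negative.",
    EXAMPLES,
    "Support never answered my ticket.",
)
print(prompt)
```

Keeping the examples in one shared constant is one simple way to keep them consistent across queries.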

Fine-Tuning

Fine-tuning involves training an LLM on your data to create a custom model adapted to your use case. Fine-tuning shines when:

- You have sufficient high-quality training data.

- Your use case is complex and requires specialized knowledge the base model lacks.

- You want reliable, consistent behavior across queries.

Fine-tuning produces highly customized models with specialized knowledge. However, it requires more data, computing resources, and expertise than prompting. Fine-tuned models can also overfit if not trained carefully.
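Much of the work in fine-tuning is data preparation. The sketch below shows one possible way to split examples into training and validation sets and serialize them as JSON Lines. The `prompt`/`completion` field names, the 80/20 split, and the sample records are assumptions for illustration; check the format your training provider or framework actually expects. Holding out a validation set is a common way to spot overfitting: training loss keeps falling while validation loss stops improving.

```python
import json
import random
from typing import Dict, List, Tuple

Record = Dict[str, str]


def split_examples(
    examples: List[Record], validation_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[Record], List[Record]]:
    """Shuffle deterministically, then hold out a fraction for validation."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def to_jsonl(examples: List[Record]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(example) for example in examples)


# Hypothetical training records for a ticket-routing model.
records = [
    {
        "prompt": f"Classify support ticket number {i}",
        "completion": "billing" if i % 2 else "technical",
    }
    for i in range(10)
]

train, validation = split_examples(records)
train_jsonl = to_jsonl(train)
validation_jsonl = to_jsonl(validation)
print(len(train), "training records,", len(validation), "validation records")
```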

RAG

RAG combines an LLM with an external knowledge source like a document collection or database. The LLM can retrieve and incorporate external knowledge on the fly. RAG helps when:

- Your use case relies heavily on external knowledge.

- You want to leverage existing knowledge bases or documents.

- You want to combine closed-domain precision with open-domain creativity.

RAG provides access to external knowledge without training it into the model. However, the retriever and LLM components must be tuned and integrated. Performance also depends on the coverage and quality of the external knowledge source.
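To make the retrieve-then-generate flow concrete, here is a toy sketch that ranks documents by bag-of-words cosine similarity and places the best matches in the prompt. The scoring method, the helper names (`retrieve`, `build_rag_prompt`), and the sample documents are assumptions chosen for simplicity; production systems typically use embedding models and a vector index instead.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc: cosine(query_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_rag_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Insert the retrieved passages into the prompt as context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


# Hypothetical knowledge base.
DOCS = [
    "Refunds are issued within 14 days of purchase.",
    "Our office is closed on public holidays.",
    "Shipping to Europe takes 5 to 7 business days.",
]

print(build_rag_prompt("How long do refunds take?", DOCS, k=1))
```

If the relevant document is missing from `DOCS`, or the retriever ranks it poorly, the LLM never sees it, which is why coverage and retrieval quality matter so much.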

Chaining

Chaining involves connecting multiple LLMs in sequence, with each model's output serving as input to the next. Chaining is useful when:

- You want to combine the strengths of different LLMs.

- You need interpretability by dividing tasks across models.

- You want to integrate diverse skills and capabilities.

Chaining lets you build ensemble systems with complementary strengths. However, it requires carefully orchestrating each model's capabilities and optimizations. Errors can also compound, since a mistake in one step is passed on to every later step.
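The sketch below illustrates both the chaining pattern and the compounding-error concern. Each step is a plain Python function standing in for a model call; `extract_step` and `rewrite_step` are hypothetical stubs, not real models. The `chain_success_rate` helper assumes each step succeeds independently, which is a simplification.

```python
from typing import Callable, List

Step = Callable[[str], str]


def run_chain(steps: List[Step], text: str) -> str:
    """Feed the output of each step into the next one."""
    for step in steps:
        text = step(text)
    return text


def extract_step(text: str) -> str:
    """Stub for a model that extracts the key sentence (here: the first)."""
    return text.split(".")[0].strip()


def rewrite_step(text: str) -> str:
    """Stub for a model that restyles text (here: uppercases it)."""
    return text.upper()


def chain_success_rate(per_step_accuracy: List[float]) -> float:
    """Probability that every step succeeds, assuming independent errors."""
    rate = 1.0
    for accuracy in per_step_accuracy:
        rate *= accuracy
    return rate


result = run_chain(
    [extract_step, rewrite_step],
    "Refunds take 14 days. Contact support for more.",
)
print(result)
print(round(chain_success_rate([0.9, 0.9, 0.9]), 3))
```

Under the independence assumption, three steps that are each 90% accurate yield an end-to-end success rate of roughly 73%, which is why longer chains need validation between steps.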

Conclusion

Whether prompting, chaining, fine-tuning, or RAG is the right choice depends on your use case. Prompting is great for rapid prototyping, while fine-tuning enables fully custom models. RAG helps when your use case relies on external knowledge. Chaining lets you combine diverse models.

Assess your needs and constraints to decide which technique makes the most sense. The exciting advances in LLMs provide a toolkit; your job is determining which tool is right for the task.