An issue I’ve had to deal with at every startup I’ve ever worked at is the risk that someone pressing a button in a UI somewhere breaks an application that is currently running in production. This risk exists for pretty much any third-party service with a browser-based UI that you integrate into your codebase. With these types of providers, your code makes assumptions about what is available. For example, your code might assume there is:

an AWS DynamoDB table called `mytable`

a LaunchDarkly flag called `myflag`

a PlanetScale DB with a `name` column in your `user` table

The problem is that all of these providers give you access to UIs with buttons that allow you to break these assumptions and bring down your entire application — or worse, to make a change that breaks an assumption in a more subtle way, leaving your application humming along but in an undefined state.

For obviously destructive changes, mature providers like the above have safeguards in place that attempt to prevent catastrophe.

These issues are also why infrastructure as code (IaC) has become so popular, and for good reason: you are able to declare — in code — that certain resources exist before depending on them. With IaC, you then don’t need to give most users any UI access at all.

We can never truly remove these risks while relying on a third-party tool, but there is usually some minimum amount of protection these types of providers can offer to prevent users from accidentally making changes that are incompatible with the current state of their application.

How does this relate to prompt management?

As I’m sure you’re aware, there are these things called LLMs, and you interact with them via prompts, which are just strings in the end. You’ll likely start out managing all of your prompts in your codebase via string interpolation or something similar, but then realize:

managing a bunch of large strings in code is not a very nice experience

team members who don’t interact with the codebase (product managers, designers) are really good at writing and iterating on prompts but don’t have direct access to update them

A/B testing a slight variation to the prompt involves awkward copy-pasting or if-else logic on an unwieldy data structure like a large string

you want to deploy small prompt changes without redeploying your entire application

you want to track performance of various metrics as you make changes to your prompts
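For contrast, this is roughly what the in-code starting point looks like: prompts as template literals, with an A/B variant chosen by an if/else. The function names, variant names, and prompt text are hypothetical and only illustrate the pattern.

```typescript
// Hypothetical in-code prompt management: every prompt is a template literal,
// and an A/B test means branching on a variant flag.
type Variant = "control" | "concise";

function buildSystemPrompt(variant: Variant): string {
  if (variant === "concise") {
    // Variant B: a copy-pasted prompt with one sentence changed
    return "You are a helpful assistant. Answer in one short paragraph.";
  }
  // Variant A (control)
  return "You are a helpful assistant. Answer thoroughly and cite the documents.";
}

function buildUserPrompt(question: string, docs: string[]): string {
  // Number each document starting at 1 and join them with newlines
  const documents = docs
    .map((content, idx) => `Document ${idx + 1}: ${content}`)
    .join("\n");
  return `Question: ${question}\n\nDocuments:\n${documents}`;
}

console.log(buildSystemPrompt("concise"));
console.log(buildUserPrompt("some question the user asked", ["doc a", "doc b"]));
```

Any wording tweak here, even to the experimental variant, is a code change plus a redeploy.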

Managing prompts in a UI-based tool

After you realize managing prompts in code creates too many challenges, you’ll find a provider that lets you manage prompts in a browser-based UI. Kind of like the tools mentioned earlier, where your code makes assumptions about what is available… 👻

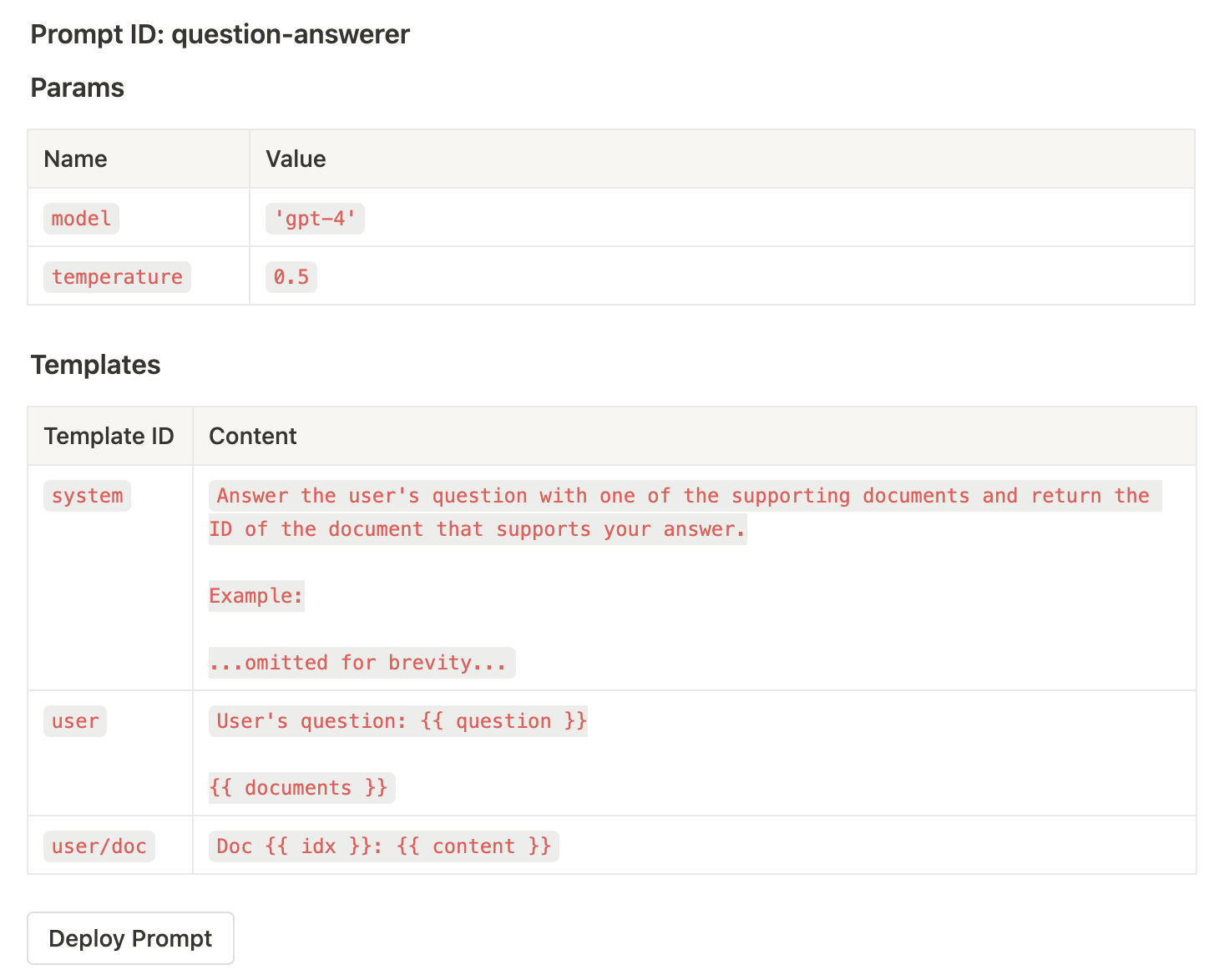

Pretend you’ve created the below prompt within this imaginary provider’s UI:

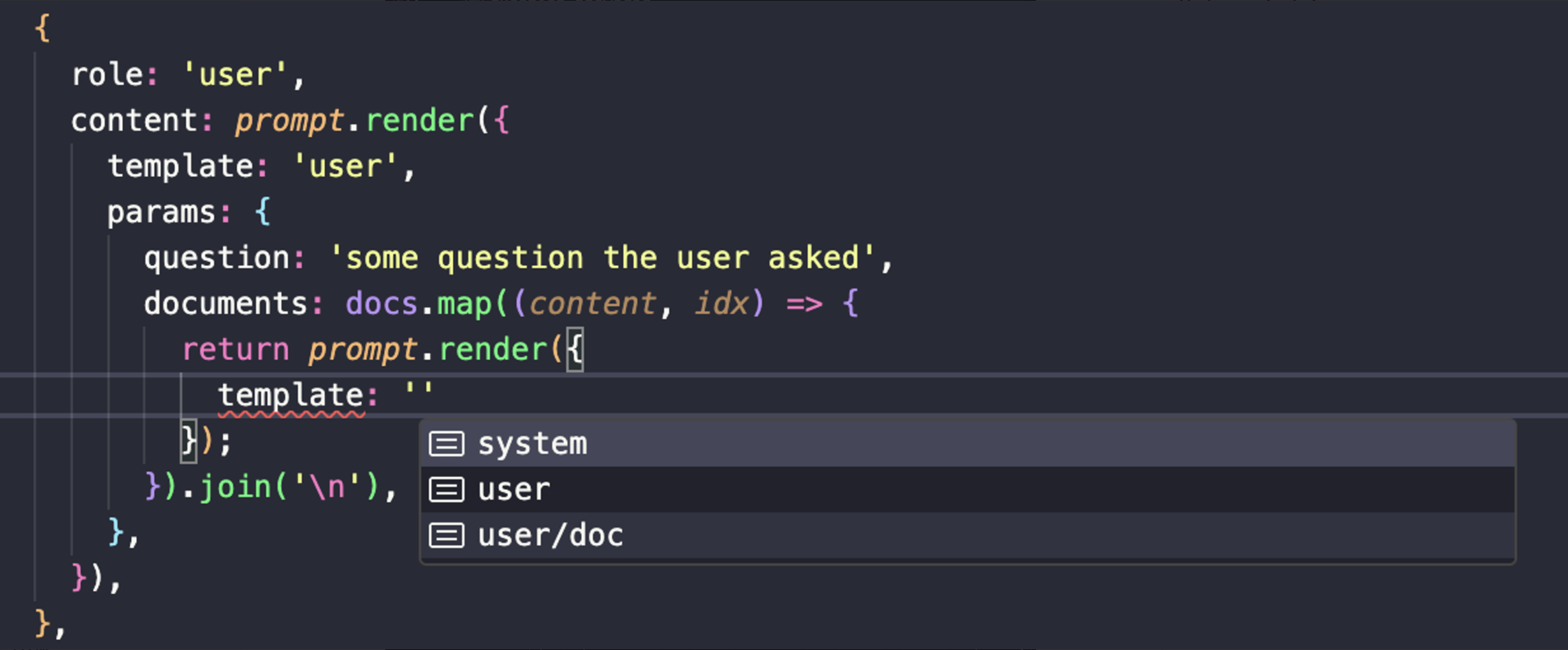

Many providers don’t even allow you to have multiple templates per prompt like this, so the example above and the code snippet below already aren’t possible in most tools today (though they are in ours!). Those tools would make you create a separate “prompt” for each template, each tracked separately, even though in the end they’re all used together to make a single request to an LLM. 🤯

In the simplest implementation, a provider would give you a way to pull this information down at runtime via the prompt ID and an interface for substituting placeholder values:

```typescript
import OpenAI from 'openai';
import { SDK } from 'someprovider'; // hypothetical provider SDK

const openai = new OpenAI();
const sdk = new SDK();

// Fetch the prompt (model params + templates) at runtime by its ID
const prompt = await sdk.get('question-answerer');

// Retrieved via some RAG process
const docs = ['doc a', 'doc b'];

const response = await openai.chat.completions.create({
  model: prompt.params.model,
  temperature: prompt.params.temperature,
  messages: [
    {
      role: 'system',
      content: prompt.templates.system.render(),
    },
    {
      role: 'user',
      content: prompt.templates.user.render({
        question: 'some question the user asked',
        // Render each document with the user/doc template, then join them
        documents: docs
          .map((content, idx) => {
            return prompt.templates['user/doc'].render({
              idx: idx + 1,
              content,
            });
          })
          .join('\n'),
      }),
    },
  ],
});
```

OK great. Now you have access to a few important benefits:

the large text pieces of your prompts are no longer in your codebase

non-technical stakeholders can now participate

you can deploy changes to your prompts without doing a code deploy

But not so fast…

The last feature, while very useful, is where you’re practically guaranteed to run into issues with a provider that doesn’t protect you from deploying incompatible changes while your code is still referencing an old identifier. As soon as someone renames the template user/doc to user-doc, or renames the idx template parameter to i, your application will either:

start returning 500s to users because you’re accessing a template that no longer exists

continue “working” but use the wrong template parameter name, leading to poor user experiences because the rendered prompt you’re sending to the LLM is now nonsensical
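To make the two failure modes concrete, here is a minimal sketch using a hypothetical in-memory template store and a naive, Mustache-style `render` helper (both assumptions, not any real provider's API). Placeholders use `{{name}}` syntax, and unknown placeholders render as empty strings, which is how many template engines behave.

```typescript
// Hypothetical template store, as a UI-based provider might return it at runtime.
// Placeholders use {{name}} syntax (an assumption for this sketch).
type TemplateStore = Record<string, string | undefined>;

// Naive, Mustache-style rendering: unknown placeholders become empty strings.
function render(template: string, params: Record<string, string | undefined>): string {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (_m, name: string) => params[name] ?? "");
}

// The code was written against this version of the prompt...
const before: TemplateStore = { "user/doc": "Document {{idx}}: {{content}}" };
// ...then someone renamed the template in the UI...
const renamedTemplate: TemplateStore = { "user-doc": "Document {{idx}}: {{content}}" };
// ...or renamed the idx parameter to i.
const renamedParam: TemplateStore = { "user/doc": "Document {{i}}: {{content}}" };

function renderDoc(store: TemplateStore, idx: number, content: string): string {
  const template = store["user/doc"];
  if (template === undefined) {
    // Failure mode 1: the template no longer exists, so the request errors out
    throw new Error('Template "user/doc" not found');
  }
  // Failure mode 2 happens here silently: {{i}} has no matching value
  return render(template, { idx: `${idx}`, content });
}

console.log(renderDoc(before, 1, "doc a")); // Document 1: doc a
console.log(renderDoc(renamedParam, 1, "doc a")); // Document : doc a
try {
  renderDoc(renamedTemplate, 1, "doc a");
} catch (err) {
  console.log((err as Error).message); // Template "user/doc" not found
}
```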

You can “be really careful” and “over-communicate” and say “please don’t change the name of a template parameter”, but in my experience, especially at startups moving quickly, this is likely to break down.

This would be like giving the entire product team access to a UI where they can make destructive changes to a production database with the click of a button. 😱

Our assumption at Autoblocks is that teams building AI products won’t want to take this risk, especially for something as important as prompts, which will presumably be somewhere on the critical path of your users’ experience with your application.

Prompt Templates ≈ Schemas

When you boil it down, prompts and their templates are really just a schema. Again, using the example above, its schema would look something like:

```typescript
interface QuestionAnswerer {
  params: {
    model: string;
    temperature: number;
  };
  templates: {
    system: {};
    user: {
      question: string;
      documents: string;
    };
    "user/doc": {
      idx: string;
      content: string;
    };
  };
}
```

Engineers are already very protective of their database schema, with many tools existing today to safely interact with and migrate schemas to prevent backwards-incompatible changes.

Why shouldn’t we have that same safety for prompts, which are changed way more often than a database schema?!
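One way to see that templates carry a schema: the parameter names can be read straight out of the template text. The sketch below assumes a `{{name}}` placeholder syntax and uses made-up template text (the real prompt is only shown in the screenshot). `extractSchema` is a hypothetical helper, and it captures only template parameter names, not the model params or their types.

```typescript
// Hypothetical helper: infer each template's parameters from its text.
// Assumes {{name}} placeholders; real providers may use a different syntax.
type Templates = Record<string, string>;
type Schema = Record<string, string[]>; // template ID -> sorted parameter names

function extractSchema(templates: Templates): Schema {
  const schema: Schema = {};
  for (const [id, text] of Object.entries(templates)) {
    const names = new Set<string>();
    const pattern = /\{\{\s*(\w+)\s*\}\}/g;
    let match: RegExpExecArray | null;
    while ((match = pattern.exec(text)) !== null) {
      names.add(match[1]);
    }
    schema[id] = Array.from(names).sort();
  }
  return schema;
}

// Made-up template text for the question-answerer prompt
const questionAnswerer: Templates = {
  system: "You are a helpful assistant. Answer using only the documents provided.",
  user: "Question: {{question}}\n\nDocuments:\n{{documents}}",
  "user/doc": "Document {{idx}}: {{content}}",
};

// system has no parameters, user has documents and question, user/doc has content and idx
console.log(extractSchema(questionAnswerer));
```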

Prompts have schemas – your tools should act like it

When designing our prompt SDK, I drew a lot of inspiration from the excellent tools we use at Autoblocks for interacting with and managing our database schema:

PlanetScale — protects us from incompatible changes via safe migrations

Prisma — autogenerates a fully-typed TypeScript ORM

Safe Prompt Deploys

Similar to PlanetScale’s safe migrations, we’ve come up with a system that allows product teams to safely deploy changes to their prompts from a UI without the risk of making a change that is not compatible with their currently-deployed application.

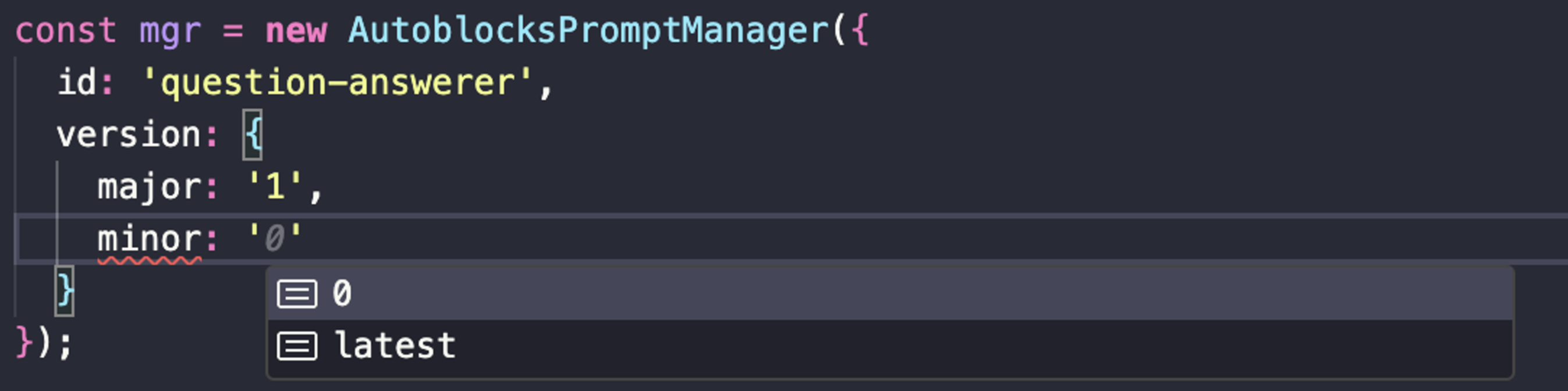

We do this via an automated major + minor versioning system where we force you to deploy a new major version if you modify the prompt’s schema.

Another way to think about this is that a major version corresponds to a snapshot of your prompt’s schema. If you make a change that doesn’t affect the schema, e.g. changing the text within one of the templates but not its parameters, then deploying that change will only bump the minor version.
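The major/minor rule can be sketched as a comparison of the prompt's schema before and after an edit. This illustrates the rule as described here, not Autoblocks' implementation; the `Schema` shape (template ID to parameter names) and `nextVersion` are assumptions.

```typescript
// Illustration of the versioning rule described above (not Autoblocks' actual code).
type Schema = Record<string, string[]>; // template ID -> parameter names
interface Version {
  major: number;
  minor: number;
}

// Canonical form so that key order and parameter order don't matter
function canonical(schema: Schema): string {
  return JSON.stringify(
    Object.keys(schema)
      .sort()
      .map((id) => [id, schema[id].slice().sort()]),
  );
}

function nextVersion(current: Version, oldSchema: Schema, newSchema: Schema): Version {
  if (canonical(oldSchema) !== canonical(newSchema)) {
    // A template or parameter was added, removed, or renamed: new major version
    return { major: current.major + 1, minor: 0 };
  }
  // Only the template text changed: compatible minor bump
  return { major: current.major, minor: current.minor + 1 };
}

const v1: Schema = { system: [], user: ["documents", "question"], "user/doc": ["content", "idx"] };
const textOnlyEdit: Schema = { ...v1 }; // wording changed, same parameters
const renamedParam: Schema = { ...v1, "user/doc": ["content", "i"] };

console.log(nextVersion({ major: 1, minor: 2 }, v1, textOnlyEdit)); // { major: 1, minor: 3 }
console.log(nextVersion({ major: 1, minor: 2 }, v1, renamedParam)); // { major: 2, minor: 0 }
```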

Then, when using our SDK, you must pin the major version while the minor version is allowed to be "latest":

```typescript
// Assumes an OpenAI client `openai` and the `docs` array from the previous example are in scope
const mgr = new AutoblocksPromptManager({
  id: "question-answerer",
  version: {
    major: "1", // must be pinned
    minor: "latest", // can be pinned or "latest"
  },
});

const response = await mgr.exec(({ prompt }) => {
  return openai.chat.completions.create({
    model: prompt.params.model,
    temperature: prompt.params.temperature,
    messages: [
      {
        role: "system",
        content: prompt.render({
          template: "system",
          params: {},
        }),
      },
      {
        role: "user",
        content: prompt.render({
          template: "user",
          params: {
            question: "some question the user asked",
            documents: docs
              .map((content, idx) => {
                return prompt.render({
                  template: "user/doc",
                  params: {
                    idx: `${idx + 1}`,
                    content,
                  },
                });
              })
              .join("\n"),
          },
        }),
      },
    ],
  });
});
```

With the above configuration, our SDK will use whatever the latest 1.x version is when you execute a prompt:

| Latest deployed version | Version used with the config above |
|---|---|
| 1.0 | 1.0 |
| 1.1 | 1.1 |
| 1.2 | 1.2 |
| 2.0 | 1.2 |
| 2.1 | 1.2 |
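The table follows from a simple resolution rule: keep only deployed versions within the pinned major, then pick the highest minor when `minor` is `"latest"`. A minimal sketch of that rule, with hypothetical `resolveVersion`, `Version`, and `VersionConfig` names (not the SDK's internals), replays the table's deployment history:

```typescript
// Sketch of resolving a pinned-major config against deployed versions.
interface Version {
  major: number;
  minor: number;
}
interface VersionConfig {
  major: string; // always pinned
  minor: string; // a specific minor, or "latest"
}

function resolveVersion(deployed: Version[], config: VersionConfig): Version | undefined {
  const major = Number(config.major);
  // Only versions within the pinned major are candidates
  const candidates = deployed.filter((v) => v.major === major);
  if (config.minor === "latest") {
    // Highest minor within the pinned major
    return candidates.reduce<Version | undefined>(
      (best, v) => (best === undefined || v.minor > best.minor ? v : best),
      undefined,
    );
  }
  const minor = Number(config.minor);
  return candidates.find((v) => v.minor === minor);
}

const format = (v: Version | undefined) => (v ? `${v.major}.${v.minor}` : "none");
const config: VersionConfig = { major: "1", minor: "latest" };
const deployments: Version[] = [
  { major: 1, minor: 0 },
  { major: 1, minor: 1 },
  { major: 1, minor: 2 },
  { major: 2, minor: 0 },
  { major: 2, minor: 1 },
];

// Replay deployments one at a time, as in the table
deployments.forEach((latest, i) => {
  const used = resolveVersion(deployments.slice(0, i + 1), config);
  console.log(`latest deployed ${format(latest)} -> uses ${format(used)}`);
});
```

Pinning `minor` to a specific number instead of `"latest"` would return that exact version within the pinned major.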

This allows you to make non-schema changes to your prompts from the Autoblocks UI without redeploying your entire application.

But as soon as you make a modification to the prompt that changes its schema, our UI will force you to deploy a new major version. Your application running in production will continue using the latest 1.x version until an engineer opens a pull request to update the major version to 2, which would also include the code changes necessary to be compatible with the new schema.

Here is an example of what this process looks like in each SDK:

Fully-Typed Prompt Client

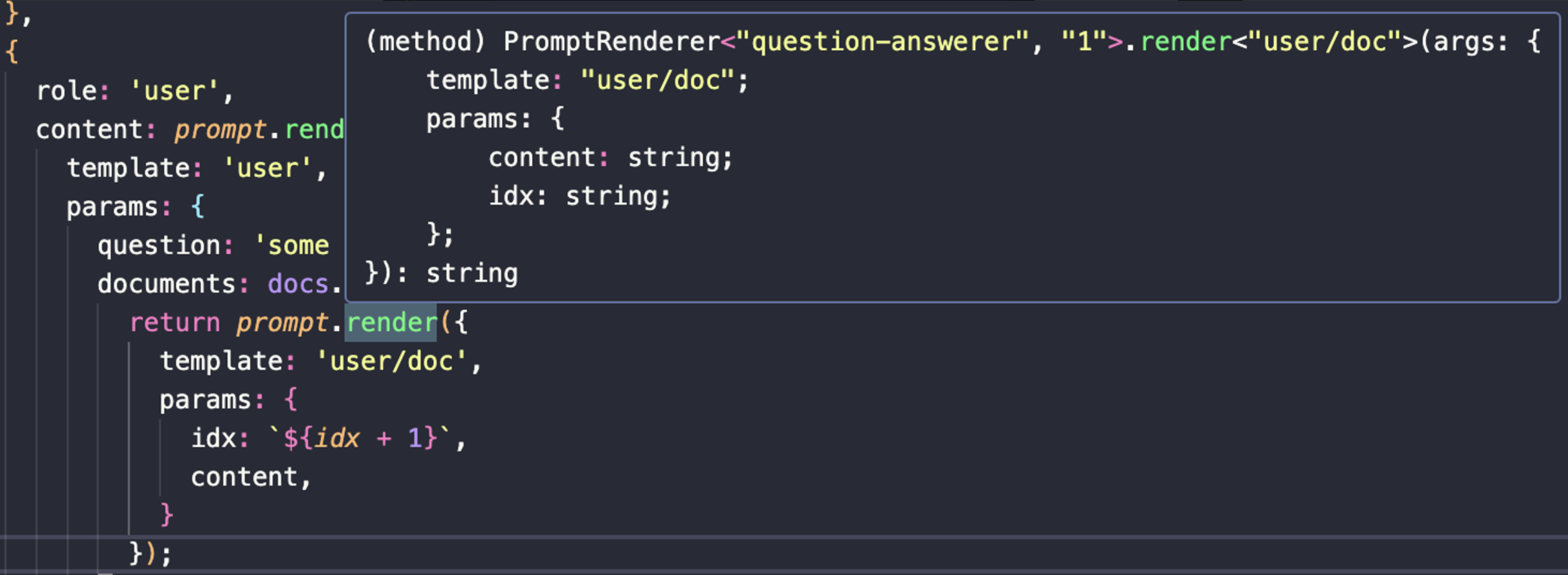

While we could have shipped our prompt SDKs without type safety, we get so much value from it during day-to-day development that it would have hurt a little to ship something where the types are things like `Record<string, any>` for the model parameters or `Record<string, string>` for the template parameters. Testing would catch some mistakes, but it’s a much nicer developer experience to use fully-typed clients that are aware of your schema and to rely on static type checking to catch major issues.
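To illustrate the difference, here is a sketch contrasting a loose `Record<string, string>` signature with one where the template ID selects the exact parameter type. `makeRenderer`, the `{{name}}` syntax, and the template text are hypothetical; the point is that a renamed template or parameter becomes a compile error instead of a production incident.

```typescript
// Hypothetical schema for the question-answerer prompt (mirrors the interface above)
interface QuestionAnswererTemplates {
  system: {};
  user: { question: string; documents: string };
  "user/doc": { idx: string; content: string };
}

// Loosely typed: any template ID and any parameter names compile,
// so a rename in the UI is only discovered at runtime
type LooseRender = (template: string, params: Record<string, string>) => string;

// Fully typed: the template ID picks the exact parameter shape
type TypedRender<T> = <K extends keyof T & string>(args: {
  template: K;
  params: T[K];
}) => string;

// Builds a typed renderer over template texts that use {{name}} placeholders
function makeRenderer<T>(texts: { [K in keyof T]: string }): TypedRender<T> {
  return ({ template, params }) => {
    const values = params as unknown as Record<string, unknown>;
    return texts[template].replace(/\{\{\s*(\w+)\s*\}\}/g, (_m, name: string) =>
      String(values[name] ?? ""),
    );
  };
}

const renderQA = makeRenderer<QuestionAnswererTemplates>({
  system: "You are a helpful assistant.",
  user: "Question: {{question}}\n\nDocuments:\n{{documents}}",
  "user/doc": "Document {{idx}}: {{content}}",
});

const doc = renderQA({ template: "user/doc", params: { idx: "1", content: "doc a" } });
console.log(doc); // Document 1: doc a

// Each of these would fail type checking:
// renderQA({ template: "user-doc", params: {} });                          // unknown template ID
// renderQA({ template: "user/doc", params: { i: "1", content: "doc a" } }); // unknown parameter "i"
```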

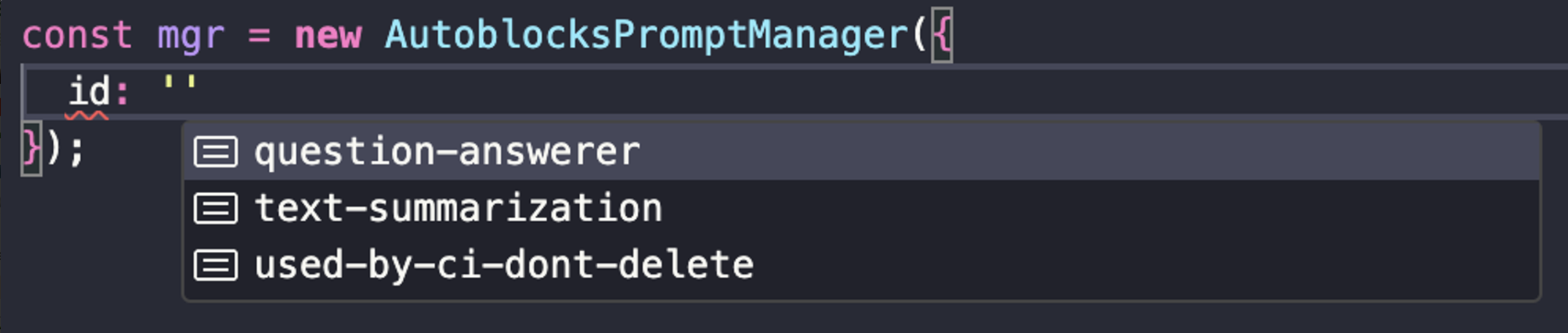

Similar to Prisma’s `prisma generate` command, which autogenerates a fully-typed client tailored to your database schema, both of our SDKs ship with CLIs that autogenerate either types (for TypeScript) or code (for Python) tailored to your prompts’ schemas.
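As a rough illustration of the generation step, this sketch turns prompt schemas (prompt ID, then major version, then template ID, then parameter names) into TypeScript declaration text. The input shape and output format are assumptions for illustration, not what `autoblocks prompts generate` actually emits, and every parameter is typed as `string`, as in the schema shown earlier.

```typescript
// Hypothetical generator: prompt schemas in, TypeScript declarations out.
// Input shape: prompt ID -> major version -> template ID -> parameter names.
type PromptSchemas = Record<string, Record<string, Record<string, string[]>>>;

function generateTypes(schemas: PromptSchemas): string {
  const lines: string[] = ["// Auto-generated. Do not edit.", "export interface Prompts {"];
  for (const [promptId, majors] of Object.entries(schemas)) {
    lines.push(`  ${JSON.stringify(promptId)}: {`);
    for (const [major, templates] of Object.entries(majors)) {
      lines.push(`    ${JSON.stringify(major)}: {`);
      for (const [templateId, params] of Object.entries(templates)) {
        // Every template parameter is a string, as in the schema above
        const body =
          params.length > 0 ? `{ ${params.map((p) => `${p}: string;`).join(" ")} }` : "{}";
        lines.push(`      ${JSON.stringify(templateId)}: ${body};`);
      }
      lines.push("    };");
    }
    lines.push("  };");
  }
  lines.push("}");
  return lines.join("\n");
}

console.log(
  generateTypes({
    "question-answerer": {
      "1": { system: [], user: ["documents", "question"], "user/doc": ["content", "idx"] },
    },
  }),
);
```

Building the output with string concatenation keeps the sketch short; a real generator would more likely use a proper code printer.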

For our Python SDK, since there’s no such thing as compile-time type safety (unless you’re using something like mypy), we just generate actual code for you with methods whose signatures match the expected template names and their parameters.

For example, I created the prompt from the example above in Autoblocks and then ran autoblocks prompts generate in a TypeScript project:

```
➜ npm exec autoblocks prompts generate
✓ Compiled in 246ms (11 prompts)
```

I now have a fully-typed prompt client that’s aware of:

all of the prompt IDs available

all of the major and minor versions available for each prompt

each prompt’s template IDs

each template’s expected parameters

We think this is the best way to keep everyone on the team happy: non-technical people get access to prompts and developers don’t have to settle for a poor developer experience.

Try out Autoblocks Prompt SDK

I hope our approach to prompt management resonates with you and helps you manage prompts safely between technical and non-technical team members.

You can get started with our Prompt SDK today for free by visiting app.autoblocks.ai.

If you’re interested in learning more, visit our docs: docs.autoblocks.ai/features/prompt-sdk