In the rapidly evolving world of generative AI, one of the most persistent challenges for developers and product teams is evaluating AI outputs at scale. LLM judges have shown early promise, but their reliability can be hit-or-miss.

Today, Autoblocks is introducing a novel solution to make automated evaluation reliable: Self-Improving LLM Judges, a new feature that automatically improves the accuracy of LLM judges based on expert feedback.

Self-Improving LLM Judges help teams scale subjective assessments of LLM app output quality, while significantly reducing the cost of manual expert evaluations.

The Challenge: Reliable AI Evaluation at Scale

As LLM systems are integrated into more applications and grow in complexity, the need for evaluation methods that are both scalable and accurate has never been more pressing.

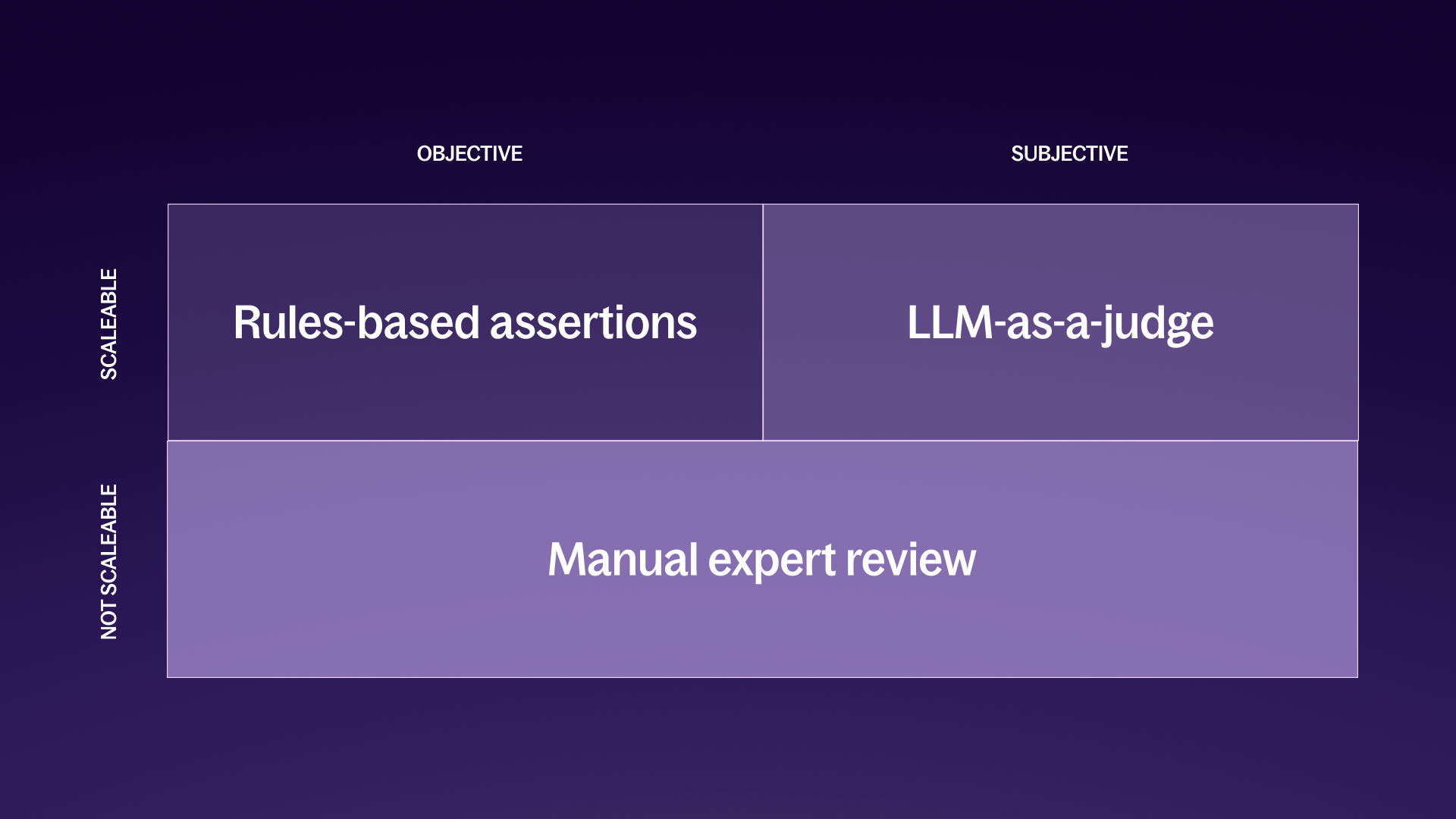

However, many teams find themselves caught between two suboptimal choices:

Manual expert reviews, which are accurate but time-consuming and difficult to scale.

Objective evaluation methods, which are fast and scalable but often miss the nuances of language and context.

Over the past few months, we've seen a spike in the use of "LLM-as-a-judge" systems, which use one LLM to evaluate the output(s) of another. While promising, this approach has produced inconsistent results. Some teams try to combat this by continuously refining their LLM judge prompts, but it's an ambiguous task that quickly gets deprioritized.

The big question remains: “who’s evaluating the [LLM-as-a-judge] evaluators?”

Enter Self-Improving LLM Judges

Autoblocks customers, especially those in highly regulated industries like healthcare, require a high degree of reliability for their evaluation systems.

Today, we’re introducing Self-Improving LLM Judges in Autoblocks: a significant leap forward in building reliable AI evaluation workflows.

Here's why it's a game-changer:

Adaptive Learning: Our system doesn't just evaluate; it learns and improves over time based on human feedback. This means your LLM judges become more accurate and better aligned with your team's preferences without constant manual prompt rewriting.

Reduced Prompt Engineering: Say goodbye to endless tweaking of LLM judge prompts. Our system starts working with basic prompts and automatically adapts them based on your team’s feedback.

Scalability with Accuracy: Our solution combines the scalability of automated systems with the nuance of human reviewers.

Seamless Integration: Easy to set up and integrate into your existing workflows, with results viewable directly in the Autoblocks UI.

Ultimately, reliable LLM judges help teams move faster and with confidence. They also save teams money by reducing the overhead of expert reviews and the cost of LLM judge failures.

How It Works

Here's how Self-Improving LLM Judges work in Autoblocks:

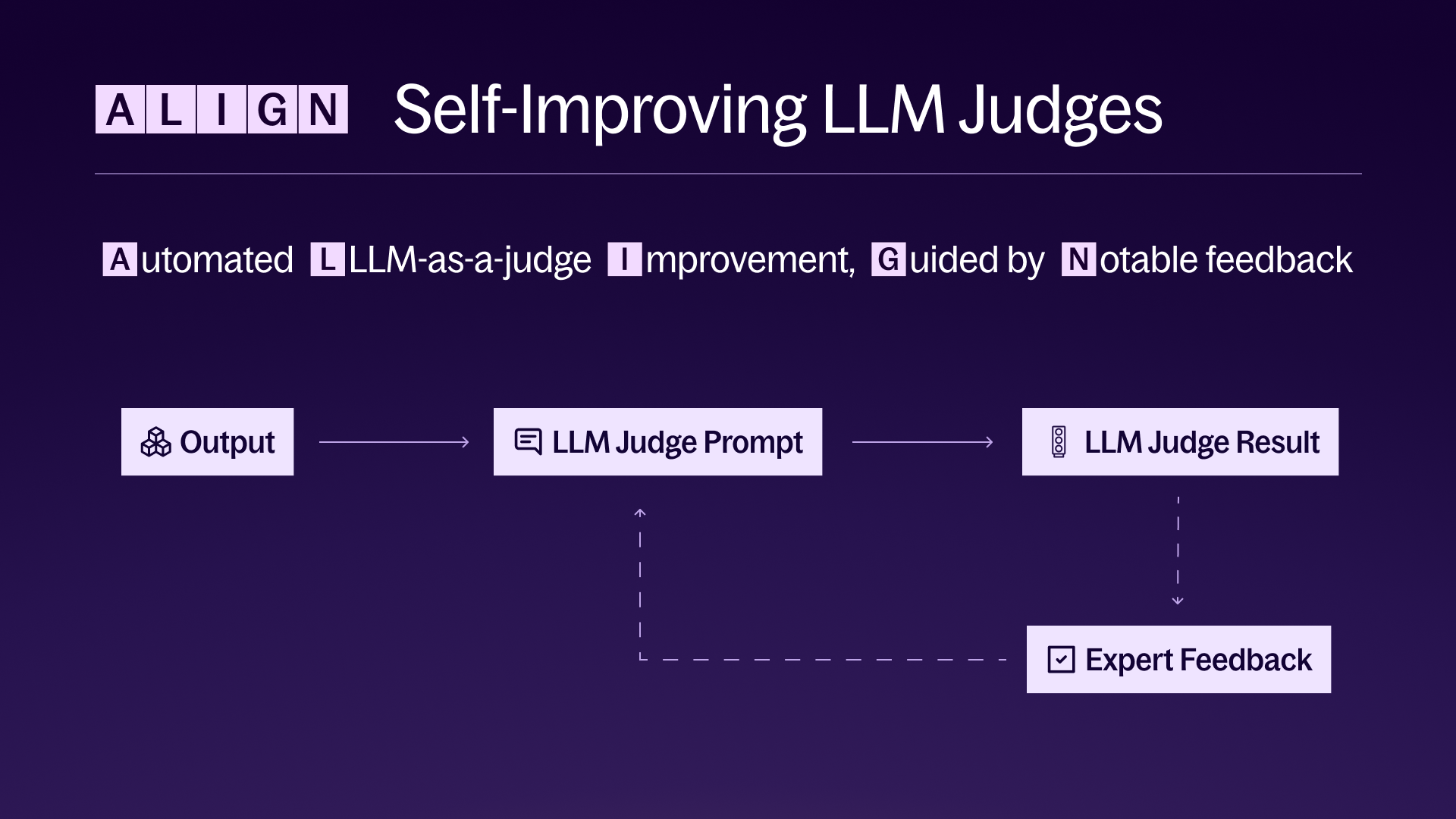

Manage your LLM-as-a-judge prompts using Autoblocks and incorporate them into your codebase.

Run your AI outputs through our evaluation system.

Review the results in Human Review Mode and provide feedback on LLM judge outputs.

Watch as the system incorporates your feedback into LLM judges, improving their accuracy over time.

We call this technique for improving LLM judge performance ALIGN: Automated LLM-as-a-judge Improvement, Guided by Notable feedback.

The concept of systematically aligning LLM judges with expert preferences was heavily inspired by the paper by Shreya Shankar, J.D. Zamfirescu-Pereira, Ian Arawjo, and others, “Who Validates the Validators? Aligning LLM-Assisted Evaluation of LLM Outputs with Human Preferences.”
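To make the feedback loop in the steps above more concrete, here is a minimal, hypothetical sketch of one common way such a loop can work. It is not Autoblocks' ALIGN implementation or SDK: the `Review` record, `agreement_rate`, and `build_judge_prompt` are illustrative names we made up. It assumes a judge that returns a pass/fail verdict, measures judge accuracy as the fraction of outputs where the judge agrees with an expert, and incorporates feedback by appending expert-corrected disagreements to the judge prompt as few-shot examples.

```python
from dataclasses import dataclass


@dataclass
class Review:
    """One judged output plus the expert's verdict (hypothetical schema)."""
    output: str
    judge_verdict: bool    # the LLM judge's verdict (True = pass)
    expert_verdict: bool   # the human expert's verdict from review
    expert_note: str = ""  # optional explanation from the expert


def agreement_rate(reviews: list[Review]) -> float:
    """Fraction of reviews where the LLM judge agreed with the expert."""
    if not reviews:
        return 0.0
    matches = sum(r.judge_verdict == r.expert_verdict for r in reviews)
    return matches / len(reviews)


def build_judge_prompt(base_prompt: str, reviews: list[Review], max_examples: int = 5) -> str:
    """Append expert-corrected disagreements to the judge prompt as few-shot examples."""
    corrections = [r for r in reviews if r.judge_verdict != r.expert_verdict]
    if not corrections:
        # The judge already agrees with every expert verdict; keep the prompt unchanged.
        return base_prompt
    lines = [base_prompt, "", "Examples graded by domain experts:"]
    for r in corrections[:max_examples]:
        label = "PASS" if r.expert_verdict else "FAIL"
        lines.append(f"- Output: {r.output!r}\n  Correct verdict: {label}")
        if r.expert_note:
            lines.append(f"  Reason: {r.expert_note}")
    return "\n".join(lines)
```

For example, if a reviewer marks the judge wrong on one of two outputs, `agreement_rate` reports 0.5, and the next version of the judge prompt carries that corrected example. Re-running the judge and re-measuring agreement on fresh expert reviews shows whether the change actually helped; a production system would also need to cap prompt growth and guard against overfitting to a handful of examples.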

Real-World Impact

Imagine being able to confidently evaluate thousands of AI outputs for hallucinations, correctness, toxicity, or any other criteria you define, and having that evaluation system continuously improve itself based on your team's expertise.

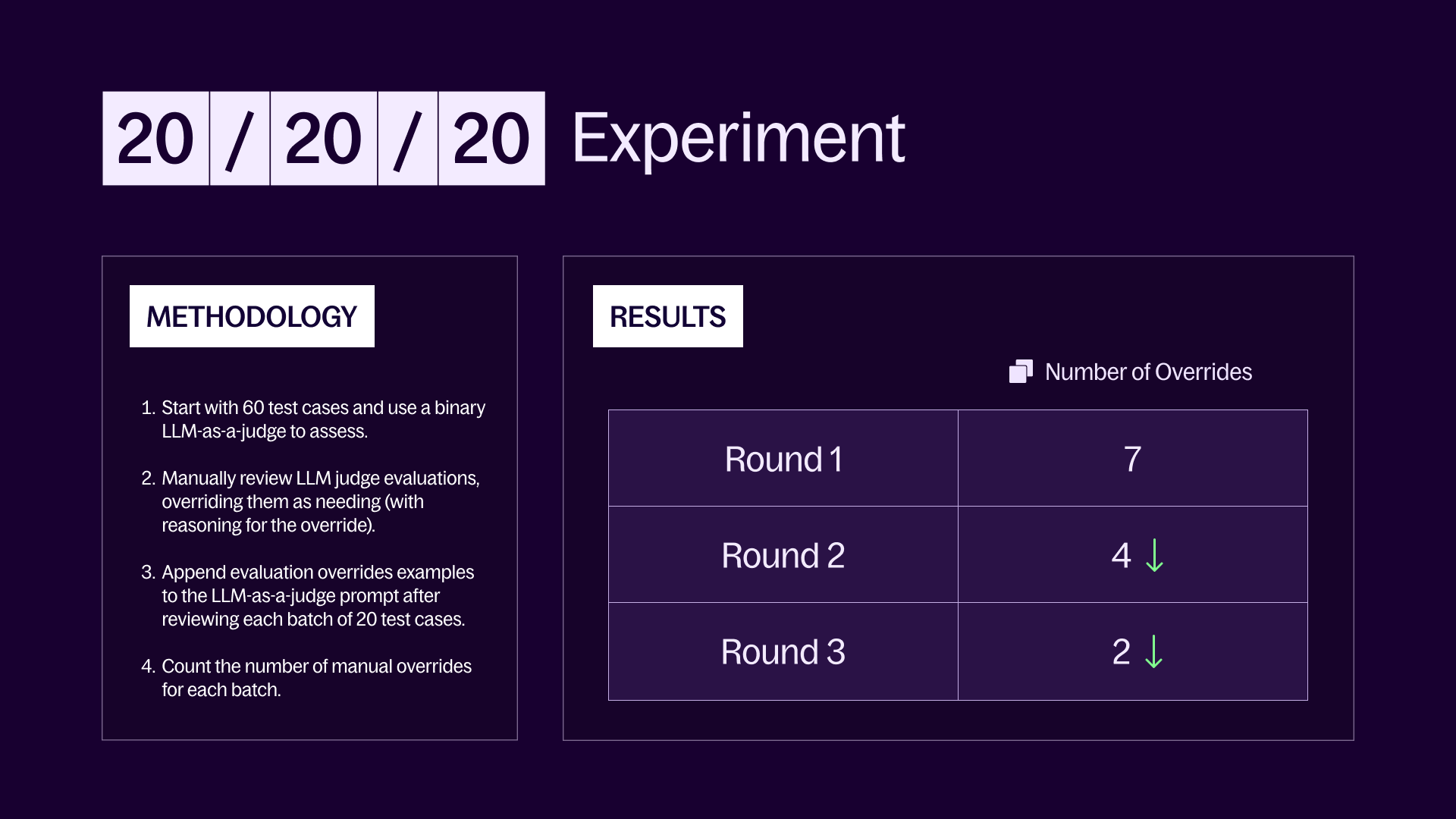

One of our early adopters, a Series C healthcare startup using LLMs, reported a 75%+ improvement in the accuracy of a critical LLM judge after just one month of using Self-Improving LLM Judges.

This improvement in accuracy corresponded to a significant reduction in time spent on costly manual evaluation by experts.

Get Started Today

At Autoblocks, we believe that the key to advancing AI technology lies not just in developing more powerful models, but in creating robust, reliable ways to evaluate and improve them. Self-Improving LLM Judges are a significant step towards this goal.

By providing AI teams with a tool that evolves and improves alongside their AI systems, we're not just solving today's evaluation challenges; we're building a foundation for the continuous improvement of AI technologies well into the future.

The future of AI evaluation is here, and it's continuously improving.

Get started with Autoblocks today by visiting app.autoblocks.ai.